Organizations must continuously cope with enormous volumes of data produced by many sources. To harness this data and derive valuable insights, they need a well-structured, efficient data pipeline architecture. Amazon Web Services (AWS) provides a comprehensive suite of tools and services for building scalable and reliable data pipelines.

Here, we discuss data pipeline architecture in AWS, strategies for building an effective pipeline architecture, big data pipeline architecture, and how to draw an AWS data pipeline architecture diagram with EdrawMax.


Part 1: Introduction to Data Pipeline Architecture in AWS

Data pipeline architecture is a critical component of any organization's data infrastructure. It defines how data moves from various sources to its destination, such as a data warehouse, data lake, or analytics platform, and how that data is processed along the way. AWS offers a range of services and tools that help organizations design, build, and manage robust data pipelines that are scalable, reliable, and cost-effective.
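Independent of any particular AWS service, the source-to-destination flow can be sketched as three composable stages: extract, transform, and load. This is a minimal illustration under stated assumptions: the JSON-lines input, the field names (`user`, `amount`), and the functions `extract`, `transform`, and `load` are hypothetical, and the in-memory list stands in for a real destination such as Amazon S3 or Amazon Redshift.

```python
import json
from typing import Iterable, Iterator, List

# Hypothetical input: one JSON object per line, e.g. {"user": 1, "amount": "12.50"}.


def extract(raw_lines: Iterable[str]) -> Iterator[dict]:
    """Parse JSON lines from a source, skipping blank, malformed, or non-object lines."""
    for line in raw_lines:
        line = line.strip()
        if not line:
            continue
        try:
            obj = json.loads(line)
        except json.JSONDecodeError:
            # A production pipeline would route bad input to a dead-letter location.
            continue
        if isinstance(obj, dict):
            yield obj


def transform(records: Iterable[dict]) -> Iterator[dict]:
    """Drop records without an amount and normalize field names and types."""
    for rec in records:
        if "amount" not in rec:
            continue
        yield {
            "user_id": str(rec.get("user", "unknown")),
            "amount_cents": round(float(rec["amount"]) * 100),
        }


def load(records: Iterable[dict], sink: List[dict]) -> int:
    """Append records to an in-memory sink standing in for a warehouse or lake."""
    count = 0
    for rec in records:
        sink.append(rec)
        count += 1
    return count


source = ['{"user": 1, "amount": "12.50"}', "not json", '{"user": 2}', ""]
destination: List[dict] = []
print(load(transform(extract(source)), destination))  # 1
print(destination)  # [{'user_id': '1', 'amount_cents': 1250}]
```

Because each stage is a generator, records stream through one at a time rather than being loaded into memory all at once; in a real AWS pipeline each stage would typically map to a managed service instead of a Python function.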

One of the key advantages of using AWS for data pipeline architecture is flexibility and scalability. Organizations can use AWS services to build pipelines that handle large volumes of data, process it in real time, and scale up or down as business demand changes, for example by adding or removing Amazon Kinesis shards or Amazon EMR cluster nodes. This flexibility is crucial for organizations that must process and analyze vast amounts of data to derive meaningful insights and make informed decisions.
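Scaling with demand ultimately comes down to capacity arithmetic. As one illustration, the sketch below estimates how many shards an Amazon Kinesis data stream in provisioned mode would need for a given load, using per-shard limits (1 MiB/s or 1,000 records/s of writes, 2 MiB/s of reads shared by standard consumers) that reflect AWS documentation at the time of writing. Treat the constants and the hypothetical `estimate_shards` helper as assumptions to verify; Kinesis also offers an on-demand capacity mode that manages shards automatically.

```python
import math

# Per-shard limits for Amazon Kinesis Data Streams in provisioned mode, as documented
# at the time of writing. These are assumptions to verify against current AWS docs.
WRITE_KIB_PER_SEC_PER_SHARD = 1024      # 1 MiB/s of ingest
WRITE_RECORDS_PER_SEC_PER_SHARD = 1000  # 1,000 records/s of ingest
READ_KIB_PER_SEC_PER_SHARD = 2048       # 2 MiB/s of reads, shared by standard consumers


def estimate_shards(avg_record_kib: float, records_per_sec: float, consumers: int = 1) -> int:
    """Back-of-the-envelope shard count for a steady load (ignores bursts and hot keys)."""
    if avg_record_kib <= 0 or records_per_sec < 0 or consumers < 1:
        raise ValueError("record size must be positive, rate non-negative, consumers >= 1")
    write_kib = avg_record_kib * records_per_sec
    read_kib = write_kib * consumers  # each standard consumer reads the full stream
    needed = max(
        write_kib / WRITE_KIB_PER_SEC_PER_SHARD,
        records_per_sec / WRITE_RECORDS_PER_SEC_PER_SHARD,
        read_kib / READ_KIB_PER_SEC_PER_SHARD,
    )
    return max(1, math.ceil(needed))


# Doubling traffic roughly doubles the shards needed.
print(estimate_shards(2, 3000, consumers=2))  # 6
print(estimate_shards(2, 6000, consumers=2))  # 12
# Many tiny records hit the records-per-second limit first.
print(estimate_shards(0.5, 5000))  # 5
```

Taking the maximum of the three ratios reflects that whichever limit is reached first determines the shard count.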

Part 2: Strategies to Build an Effective Data Pipeline Architecture in AWS

Building an effective AWS data pipeline architecture requires careful planning, design, and implementation. There are several strategies that organizations can employ to ensure that their data pipelines are efficient, reliable, and scalable.

- Define Clear Objectives and Requirements: The first step in building an effective data pipeline architecture is to define clear objectives and requirements. This involves understanding the specific use cases, data sources, processing requirements, and desired outcomes. By clearly defining the objectives and requirements, organizations can design data pipelines that are tailored to their specific needs and can deliver the desired results.

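- Choose the Right AWS Services: AWS offers a wide range of services for building data pipelines, including Amazon S3, Amazon Redshift, Amazon Kinesis, AWS Glue, and Amazon EMR, among others. Each plays a different role: Amazon S3 provides durable object storage for data lakes, Amazon Kinesis handles real-time streaming ingestion, AWS Glue offers serverless ETL and a data catalog, Amazon EMR runs large-scale processing frameworks such as Apache Spark, and Amazon Redshift serves as a data warehouse. Choose the combination of services that best meets the requirements of your pipeline.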

- Design for Scalability and Reliability: Scalability and reliability are critical considerations when designing data pipeline architecture in AWS. Pipelines should scale to handle growing data volumes and processing requirements, and they should tolerate failures, for example by retrying transient errors, making processing steps idempotent so they can be safely rerun, and monitoring for failed or delayed jobs.
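On the reliability side, a common technique is to retry transient failures, such as throttling, with capped exponential backoff plus random jitter so that many workers do not retry in lockstep; AWS describes this pattern in its architecture guidance. The sketch below is a generic, standard-library version in which `TransientError`, `retry_with_backoff`, and the default delays are illustrative assumptions. AWS SDKs such as boto3 already have built-in retry modes for their own API calls, so a helper like this is mainly useful for wrapping your own pipeline steps, and it is safe only when the retried step is idempotent.

```python
import random
import time
from typing import Callable, Optional, Tuple, Type, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures that are safe to retry, such as throttling."""


def retry_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    retry_on: Tuple[Type[BaseException], ...] = (TransientError,),
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Run operation, retrying listed errors with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    if rng is None:
        rng = random.Random()
    for attempt in range(max_attempts):
        try:
            return operation()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Full jitter: sleep a random time between 0 and the capped exponential delay.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(rng.uniform(0, cap))
    raise RuntimeError("unreachable")  # the loop always returns or raises


# Usage: a hypothetical load step that is throttled twice, then succeeds.
attempts = {"count": 0}


def flaky_load() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("throttled")
    return "loaded"


delays: list = []
print(retry_with_backoff(flaky_load, sleep=delays.append))  # loaded
print(attempts["count"], len(delays))  # 3 2
```

Injecting `sleep` keeps the helper testable without real waiting; errors not listed in `retry_on` propagate immediately.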

Part 3: Exploring Big Data Pipeline Architecture

Big data pipeline architecture in AWS involves handling and processing large volumes of data, often in real time, to derive valuable insights and support data-driven decision-making. Building an effective big data pipeline architecture requires specialized tools and techniques to handle the unique challenges of big data, such as high volume, velocity, and variety.
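High velocity usually means writing many small records, and streaming ingestion APIs cap how much a single request may carry. The sketch below groups records into batches that respect limits modeled on the Kinesis Data Streams `PutRecords` API (500 records and 5 MiB per request, 1 MiB per record, at the time of writing). The constants and the `batch_records` helper are assumptions for illustration; the code only builds batches and does not call AWS, and sending each batch (for example with boto3's `put_records`) and retrying failed entries is left out.

```python
from typing import Iterable, Iterator, List, Tuple

# Limits modeled on the Kinesis Data Streams PutRecords API at the time of writing.
# Treat them as assumptions and verify against current AWS documentation.
# This sketch conservatively counts partition keys toward both size limits.
MAX_RECORDS_PER_BATCH = 500
MAX_RECORD_BYTES = 1024 * 1024
MAX_BATCH_BYTES = 5 * 1024 * 1024

Record = Tuple[str, bytes]  # (partition_key, data)


def record_size(record: Record) -> int:
    """Bytes a record counts against the limits: partition key plus payload."""
    key, data = record
    return len(key.encode("utf-8")) + len(data)


def batch_records(records: Iterable[Record]) -> Iterator[List[Record]]:
    """Group a record stream into batches that respect count and size limits."""
    batch: List[Record] = []
    batch_bytes = 0
    for rec in records:
        size = record_size(rec)
        if size > MAX_RECORD_BYTES:
            raise ValueError(f"record of {size} bytes exceeds the per-record limit")
        # Flush the current batch if adding this record would break either limit.
        if batch and (len(batch) == MAX_RECORDS_PER_BATCH or batch_bytes + size > MAX_BATCH_BYTES):
            yield batch
            batch, batch_bytes = [], 0
        batch.append(rec)
        batch_bytes += size
    if batch:
        yield batch


small = [(f"user-{i % 10}", b"x" * 100) for i in range(1200)]
print([len(b) for b in batch_records(small)])  # [500, 500, 200]

payload = b"y" * 1_000_000
large = [("k", payload)] * 6
print([len(b) for b in batch_records(large)])  # [5, 1]
```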

One of the key components of big data pipeline architecture in AWS is the use of distributed computing and storage technologies, such as Amazon EMR (Elastic MapReduce) and Amazon S3. Amazon EMR allows organizations to process large-scale data using frameworks such as Apache Hadoop, Apache Spark, and Presto, while Amazon S3 provides scalable and durable storage for big data.
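When Amazon S3 serves as the storage layer for processing engines on Amazon EMR, a common convention is to lay out object keys as Hive-style `key=value` partitions (for example by date), so that engines such as Apache Spark can discover partitions and skip data a query does not need. The helper below is a minimal sketch of that convention; the prefix, dataset name, hourly granularity, and the `partitioned_key` function itself are illustrative assumptions, and the code only builds key strings without calling S3.

```python
import uuid
from datetime import datetime, timedelta, timezone
from typing import Optional


def partitioned_key(
    prefix: str,
    dataset: str,
    event_time: datetime,
    part_id: Optional[str] = None,
    suffix: str = "json",
) -> str:
    """Build a Hive-style partitioned object key (year=/month=/day=/hour=) in UTC."""
    if event_time.tzinfo is None:
        raise ValueError("event_time must be timezone-aware")
    ts = event_time.astimezone(timezone.utc)  # partition on UTC so all producers agree
    part = part_id if part_id is not None else uuid.uuid4().hex  # unique file name
    return (
        f"{prefix.strip('/')}/{dataset}/"
        f"year={ts.year:04d}/month={ts.month:02d}/day={ts.day:02d}/hour={ts.hour:02d}/"
        f"part-{part}.{suffix}"
    )


utc_event = datetime(2024, 3, 7, 15, 42, tzinfo=timezone.utc)
print(partitioned_key("raw/", "clickstream", utc_event, part_id="0001"))
# raw/clickstream/year=2024/month=03/day=07/hour=15/part-0001.json

# 23:30 at UTC-5 is 04:30 the next day in UTC, so it lands in the day=08 partition.
local_event = datetime(2024, 3, 7, 23, 30, tzinfo=timezone(timedelta(hours=-5)))
print(partitioned_key("raw", "clickstream", local_event, part_id="0002"))
# raw/clickstream/year=2024/month=03/day=08/hour=04/part-0002.json
```

A Spark job on EMR could then read a base path such as `s3://<bucket>/raw/clickstream/` (the bucket is a placeholder) and filter on the `year`, `month`, and `day` columns so that only matching partitions are scanned.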

Part 4: Creating an AWS Data Pipeline Architecture Diagram with EdrawMax

Wondershare EdrawMax is the ultimate solution for creating an AWS data pipeline architecture diagram. It offers a wide range of tools and features, and its user-friendly interface, flexible templates, and powerful export options make it a go-to choice for professionals. With EdrawMax, you can easily design and present a clear, concise representation of your AWS data pipeline architecture. Follow these steps to create your diagram:

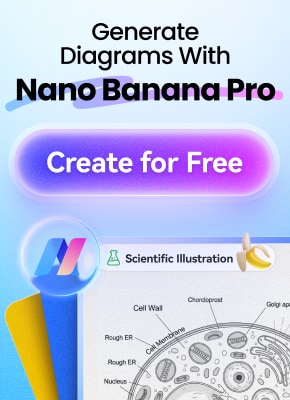

Step 1:

First, sign in to your EdrawMax account. After you log in, the EdrawMax dashboard is displayed.

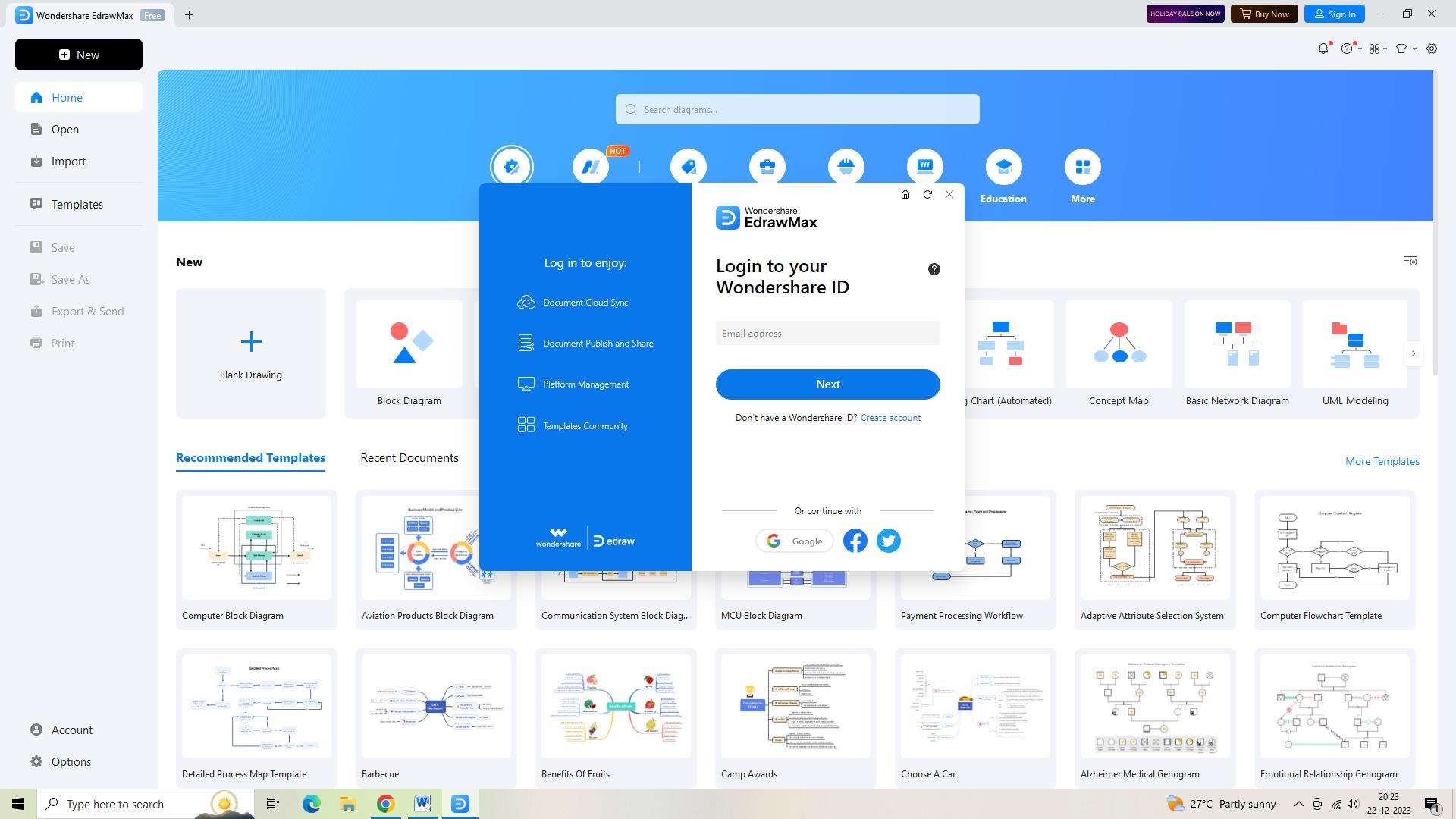

Step 2:

Next, create a new EdrawMax document by clicking the "New" button. This gives you a blank canvas on which to draw your AWS data pipeline architecture diagram.

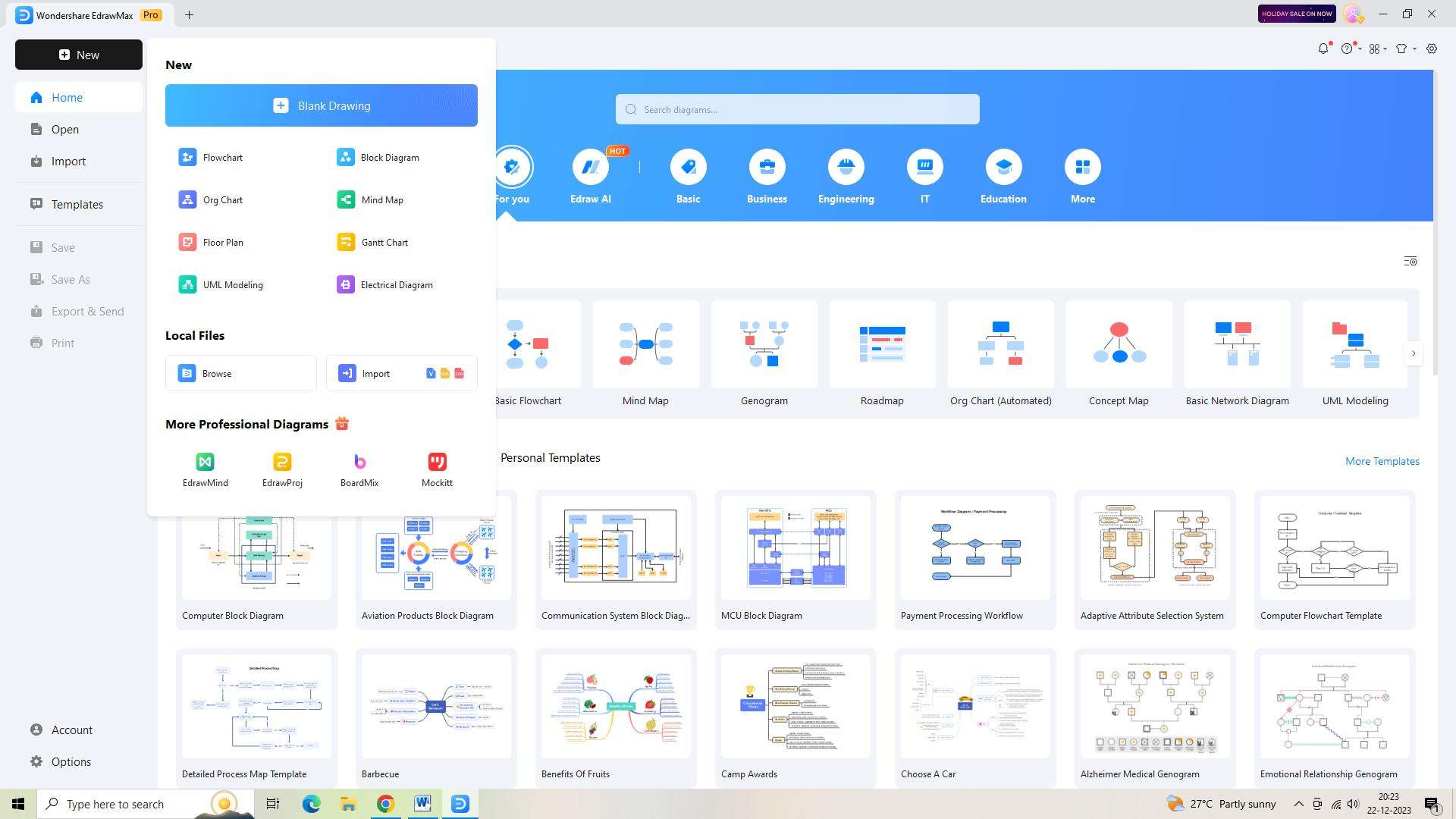

Step 3:

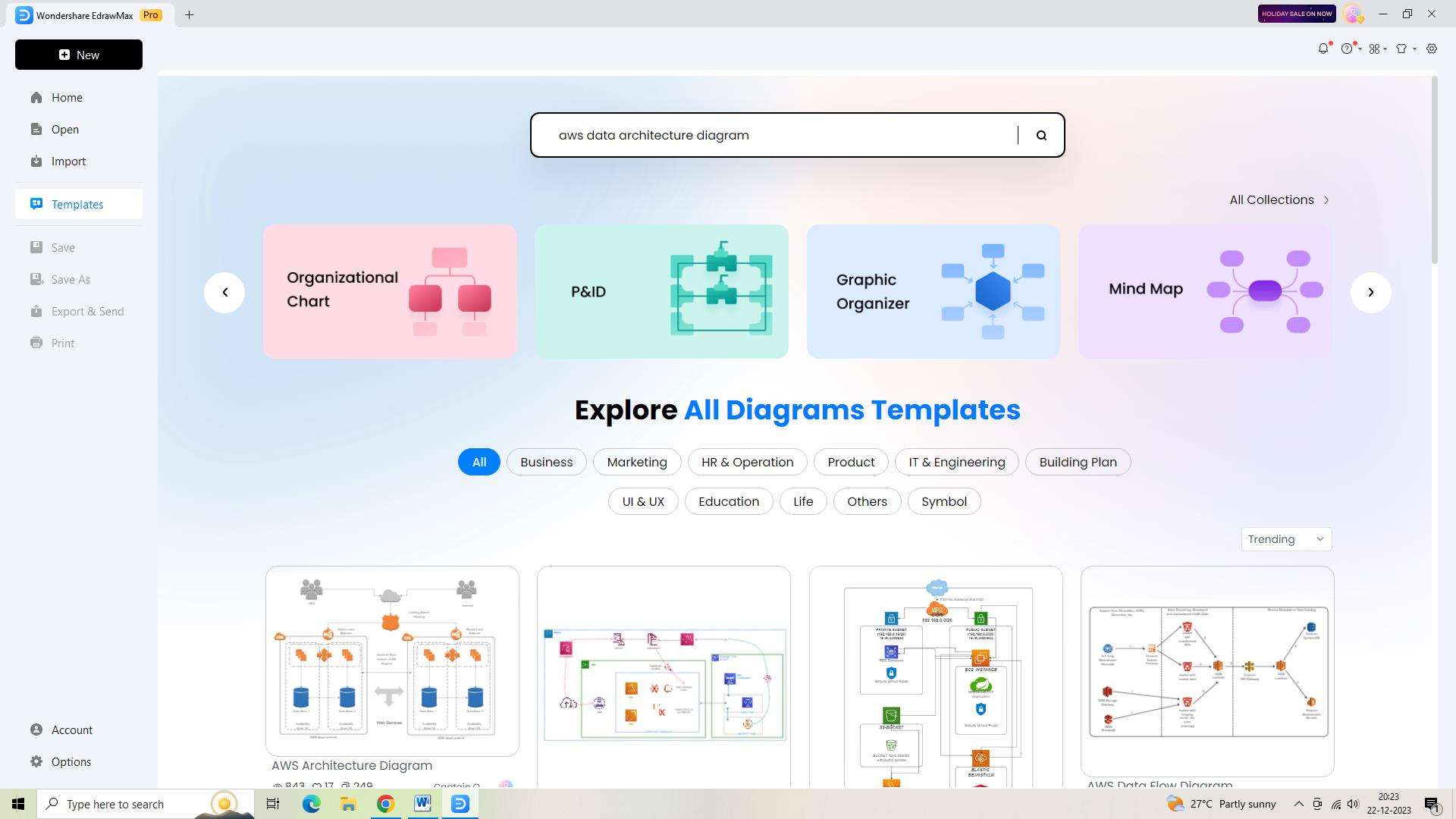

Now find a suitable AWS data pipeline architecture diagram template. Enter "AWS data pipeline architecture diagram" into the search box and choose a template from the results.

Step 4:

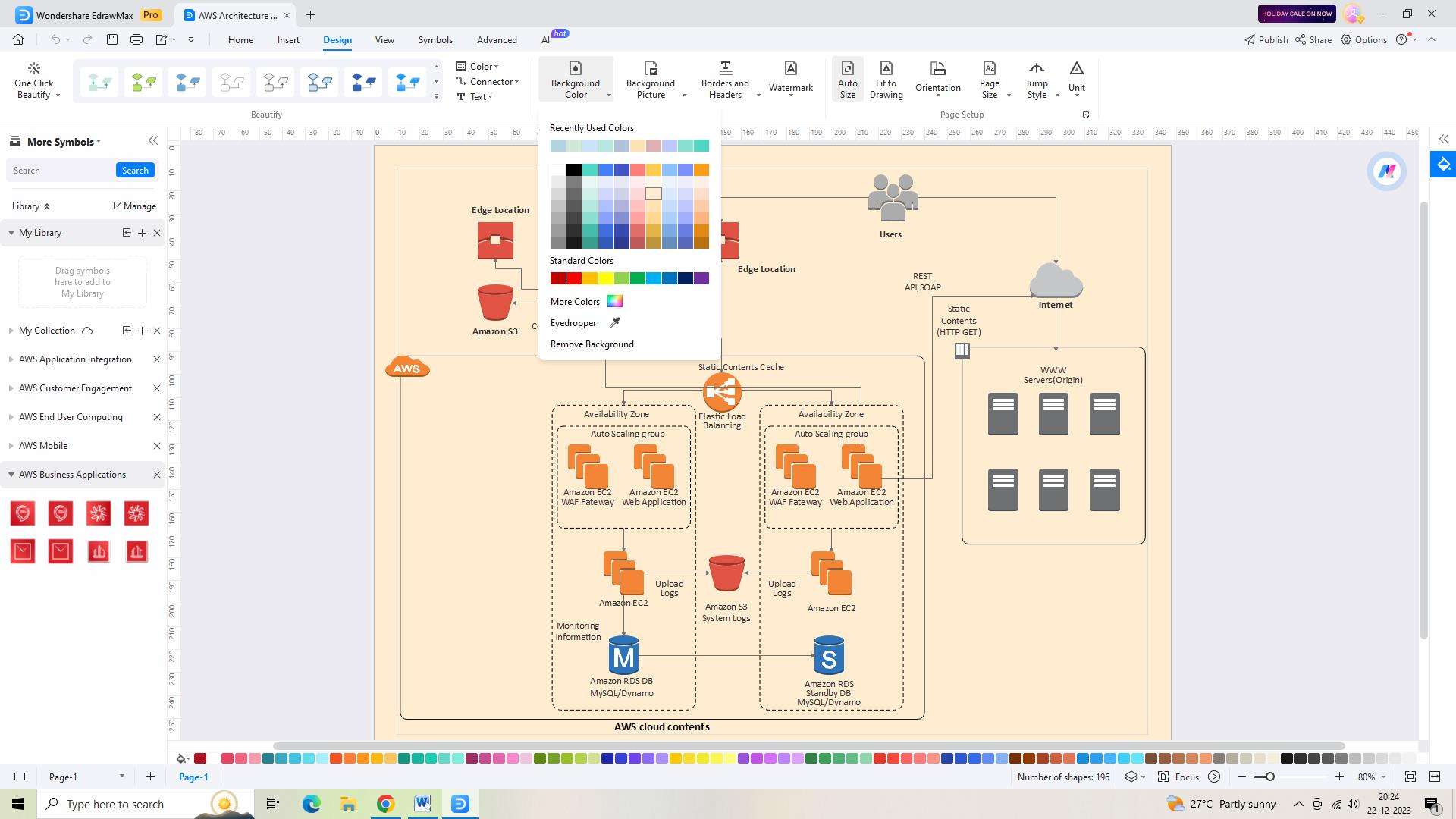

Once you have chosen a template, customize the diagram to meet your specific requirements. EdrawMax's intuitive interface offers a wide range of tools and features for personalizing your diagram.

Step 5:

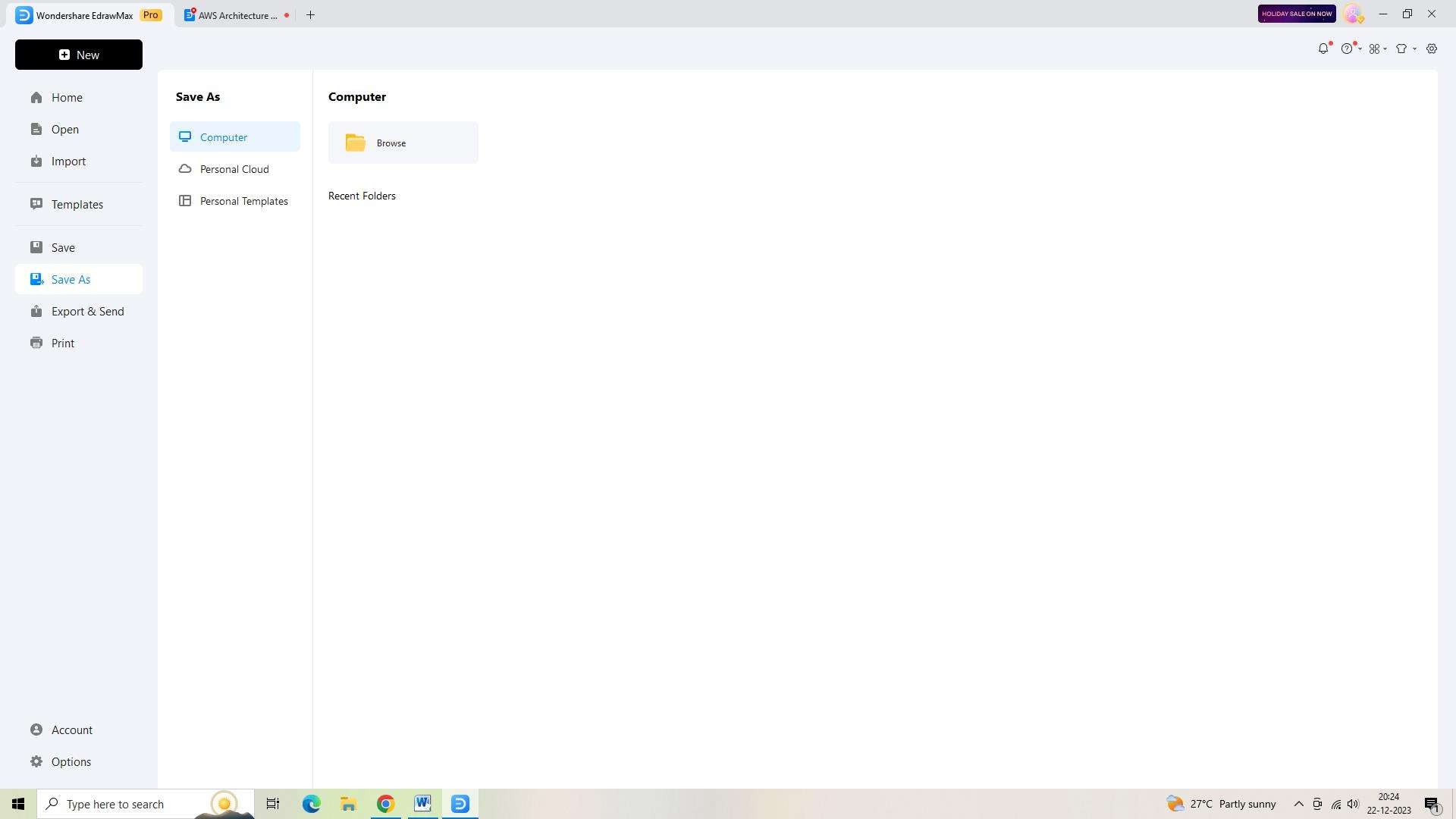

It is a good idea to save your work as you go. To save your AWS data pipeline architecture diagram, click the "Save As" icon in the top toolbar.

Step 6:

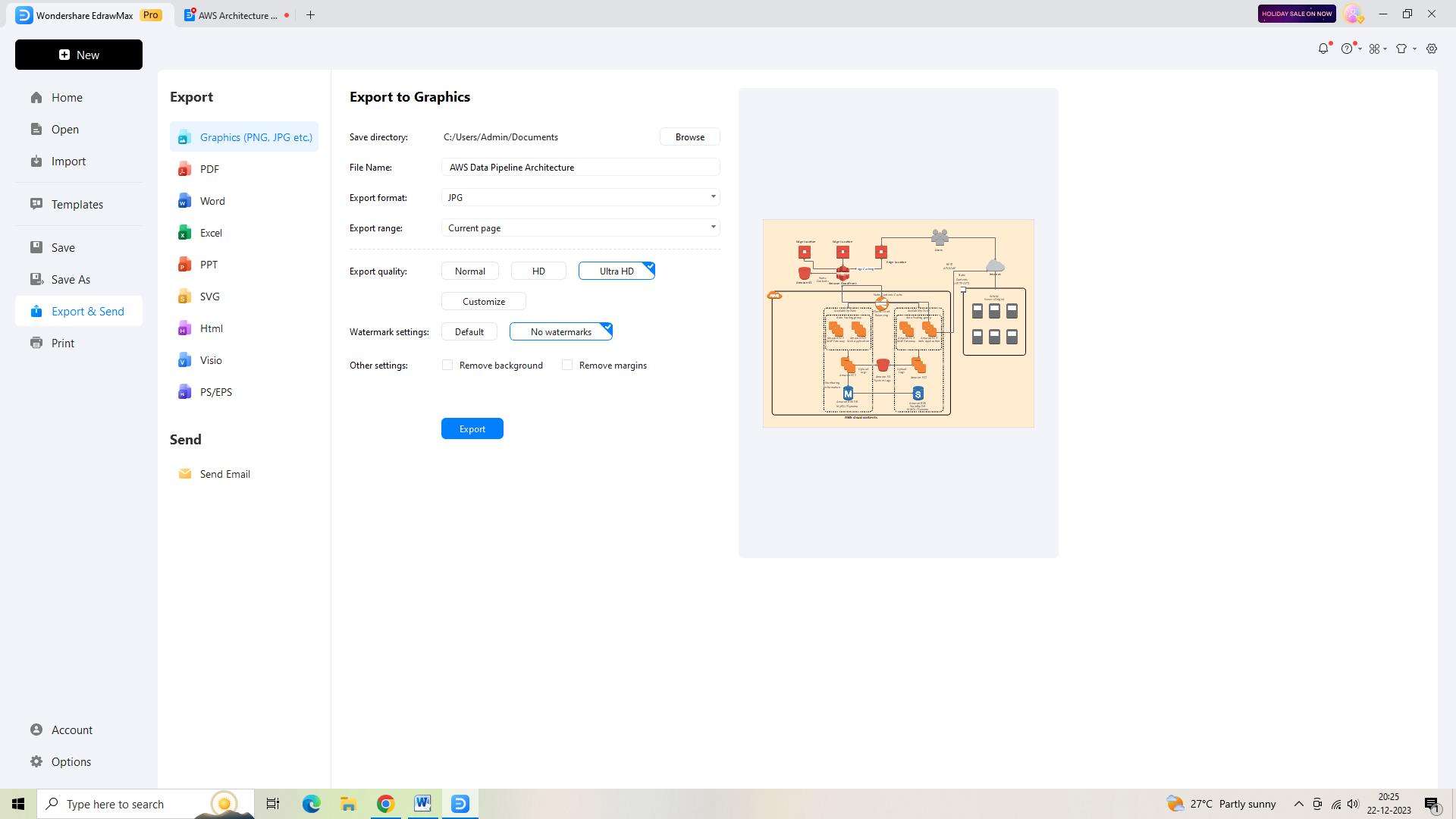

Finally, export your diagram. EdrawMax can export your AWS data pipeline architecture diagram in a number of file formats, including PDF and JPEG. To export, click the "Export" icon in the top toolbar and choose the file format you want.

Conclusion

AWS data pipeline architecture is a critical component of any organization's data infrastructure, enabling the movement and processing of data from various sources to the desired destination. By employing strategies such as defining clear objectives, choosing the right AWS services, designing for scalability and reliability, implementing data security and compliance, and monitoring performance, organizations can build effective data pipeline architectures in AWS.